According to the Drought Monitor, drought conditions improved recently across some of the US. As of Mar. 12, 2013, 47.3% of the contiguous US is experiencing moderate or worse drought (D1-D4) as the 2011-2012 drought extends well into 2013. That is the lowest percentage in a number of months. The percentage area experiencing extreme to exceptional drought increased from 14.6% to 14.7%, but this is ~3% lower than it was three months ago. Percentage areas experiencing drought across the West decreased in the past month as a series of late-season cyclones impacted the region. Drought across the Southwest worsened slightly, while rain from storms maintained the low level of drought conditions in the Southeast.

My previous post preceded the series of major winter storms that affected much of the US. In some places in the High Plains and Midwest, 12″ or more of snow fell. With relatively high liquid water equivalency, each storm dropped almost 1″ of liquid precipitation, of which the area was in sore need. Unfortunately, these same areas required 2-4″ of rain to break their long-term drought. In other words, the recent snows, while welcome, have reduced the magnitude of the drought in many areas but have not completely alleviated it. Ironically, a very different problem arose from these storms: flooding.

Figure 1 – US Drought Monitor map of drought conditions as of April 25th.

If we focus in on the West, we can see recent shifts in drought categories:

Figure 2 – US Drought Monitor map of drought conditions in Western US as of April 25th.

Some relief is evident in the past month (see table on left), including some changes in the mountains as storms recently dumped snow across the region. Mountainous areas and river basins will have to wait until spring for snowmelt to significantly alleviate drought conditions. As you can probably tell, this large area has been experiencing abnormally dry conditions for about a year now.

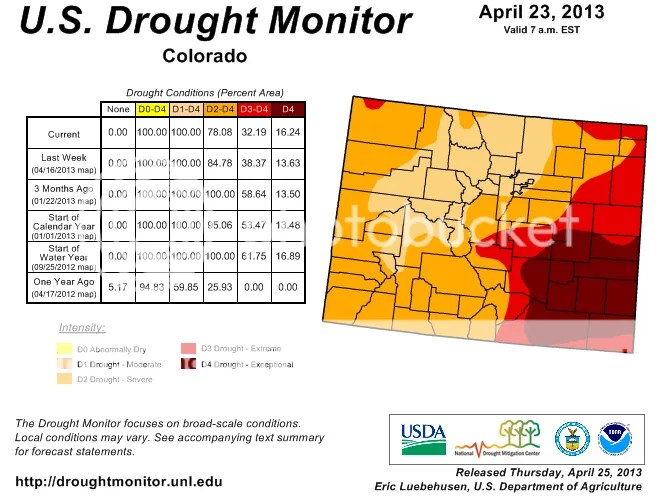

Here are conditions for Colorado:

Figure 3 – US Drought Monitor map of drought conditions in Colorado as of April 25th.

There is some evidence of relief over the past three months here. Instead of 100% of the state in Severe drought, only 78% is today. The central and northern mountains, as well as the northern Front Range (Denver north to the border), enjoyed the most relief since February. The percentage area in Extreme drought also dropped significantly, from 59% to 38%. Exceptional drought shifted in space from northeastern Colorado to central Colorado, while southeastern Colorado remained very dry.

Drought conditions improved somewhat across the southwestern portion of the state in the past couple of weeks. The percentage area that is experiencing less than Severe drought conditions continues to track downward, which is a good sign. Unfortunately, Exceptional drought conditions continued their hold over the eastern plains.

Here are conditions for the High Plains states:

Figure 4 – US Drought Monitor map of drought conditions in the High Plains as of April 25th.

The large storms that moved over this area in the past month reduced the worst drought conditions across Nebraska, South Dakota, and Wyoming. The percentage area with Exceptional drought dropped from 27% to 7%; Extreme drought dropped from 61% to 28%; and Severe drought dropped from 87% to 70%.
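To make the size of those drops easier to compare, here is a minimal sketch that works through the arithmetic for the High Plains figures quoted above. The dictionary and function names are my own illustration, not anything from the Drought Monitor, and the only inputs are the before and after percentages from the paragraph.

```python
# Percentage of High Plains area in each drought category, as
# (before, after) pairs quoted in the paragraph above.
coverage = {
    "Severe": (87, 70),
    "Extreme": (61, 28),
    "Exceptional": (27, 7),
}


def point_change(before, after):
    """Change in percentage points (negative means less area in drought)."""
    return after - before


def relative_change(before, after):
    """Change relative to the starting coverage, in percent."""
    return 100.0 * (after - before) / before


# Map each category to (percentage-point change, relative change).
changes = {
    name: (point_change(b, a), round(relative_change(b, a), 1))
    for name, (b, a) in coverage.items()
}

for name, (points, relative) in changes.items():
    print(f"{name}: {points:+d} points ({relative:+.1f}% relative)")
```

Both measures are worth a look: Extreme drought shows the largest drop in percentage points, while Exceptional drought shrank the most relative to where it started.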

With significant areas still experiencing moderate or worse drought across much of the US west of the Mississippi River, drought remains a serious concern in 2013. I previously hypothesized that the 2012 drought was partly a result of natural climate variability and partly a result of underlying long-term warming. A couple of weeks ago, I wrote about NOAA’s examination of the causes of the 2012 drought, in which the authors suggested it was not heavily influenced by long-term warming.

US drought conditions are more strongly influenced by Pacific and Atlantic sea surface temperature conditions. Different natural oscillation phases preferentially condition environments for drought. Droughts in the West tend to occur during the cool phases of the Interdecadal Pacific Oscillation and the El Niño-Southern Oscillation, for instance. Beyond that, drought controls remain a significant unknown. Population growth in the West in the 21st century means scientists and policymakers need to better understand what conditions are most likely to generate multidecadal droughts, as have occurred in the past.

Because drought affects regions differently, our policy responses vary. A growing number of water utilities recognize the need for a proactive mindset with respect to drought impacts. The last thing they want is for their reliability to suffer. Americans are privileged in that clean, fresh water flows when they turn on their taps. Crops continue to show up at their local stores despite terrible conditions in many areas of their own nation (albeit at a higher price, as we will find this year). Power utilities continue to provide hydroelectric-generated energy.

That last point will change in a warming and drying future. Regulations limit the temperature of the water that power plants discharge. Under generally warmer climate conditions, river and lake water is warmer today than it was 30 years ago. Warmer water going into a plant means either warmer water coming out or a longer time spent in the plant, which reduces the amount of energy the plant can produce. Alternatively, we can continue to generate the same amount of power if we are willing to sacrifice ecosystems that depend on a very narrow range of water temperatures. As with other facets of climate change, technological innovation can help increase plant efficiency. I think innovation remains our best hope to minimize the number and magnitude of climate change impacts on human and ecological systems.